By Irakli Machaidze, a Georgian political writer, analytical journalist and fellow with Young Voices Europe. Irakli is currently based in Vienna, Austria, pursuing advanced studies in International Relations. He specialises in EU policy and regional security in Europe.

As the world’s first sweeping AI law, the AI Act is a bold statement in the EU’s quest for digital sovereignty. But amid the urgency to break barriers and set precedents, cracks in the foundations emerge, particularly around human rights and stifled innovation in the private sector. Despite Brussels’ keenness, regulation is moving in the wrong direction.

Brussels clearly hopes the EU’s AI Act will set the bar worldwide and become the standard of AI regulation. EU legislators cite the “Brussels effect” as the turbocharger behind the lightning-fast approval of this landmark legislation. The motivation behind this mammoth piece of legislation is not just protecting European consumers and regulating the use of AI in Europe. There’s something else at play here: good old-fashioned fear.

Europe doesn’t want to be left behind the rest of the world. If Europe doesn’t step up its game in the digital arena, regulators worry, other countries will swoop in and steal the spotlight, leaving the EU trailing. That raises questions about what Brussels hopes to achieve with its regulations. Is it thinking about policy or worried about politics? Although undoubtedly a significant legislative milestone, the EU’s AI Act nevertheless lacks sufficient safeguards for human rights.

Despite its emphasis on privacy and fundamental rights, the Act’s numerous exemptions pose potential risks, particularly for vulnerable groups. Under the AI Act, high-risk AI systems undergo mandatory impact assessments, covering sectors like insurance, healthcare, and banking. But some doors remain open, with exceptions that allow law enforcement to use biometric identification, subject to judicial authorisation and only for specific crimes.

It’s not hard to envision a scenario where law enforcement invokes national security interests to exempt its use of AI. Likewise, the Act’s ban on emotion recognition applies only to education and workplaces, a loophole that permits its deployment elsewhere, such as at border crossings. This has worrying implications for liberty and privacy. Do we really want law enforcement to be able to use this technology at the border? As regulations navigate these complexities, striking a delicate balance between fostering innovation and safeguarding individual rights remains imperative.

Europe’s stringent regulatory standards risk stifling innovation by hampering entrepreneurial spirit and impeding technological progress. That’s the reality for many start-ups in Europe already grappling with the weight of data regulations like the General Data Protection Regulation (GDPR).

Intended to protect privacy, these rules inadvertently hand the advantage to tech giants who can navigate the maze of compliance, leaving innovative newcomers gasping for breath. The numbers tell the story: just two of the world’s top 30 tech firms hail from the EU, and a mere five of the 100 most promising AI start-ups call Europe home. It’s clear that regulatory hurdles like these are stifling the continent’s entrepreneurial spirit.

The EU’s tech scene lacks big players. Where are the European versions of Microsoft, Google, or Apple? To thrive in the digital age, Europe must heed the call to embrace a more innovation-friendly regulatory framework, one that nurtures entrepreneurship rather than focusing solely on the state-centric, demanding and contentious security implications that AI can bring.

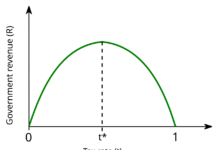

In today’s rapidly evolving landscape of innovation, striking the right balance between regulation and progress is essential. Strangling business growth, curtailing market access, and blocking start-up acquisitions through poor regulation could spell disaster for innovation, stifling the emergence of groundbreaking technologies.

With the AI Act, there’s a risk of compromising fundamental rights while burdening startups with stringent regulations, hindering their potential to soar like their US and Chinese counterparts. Ensuring consistency in data protection and usage rules across both public and private sectors is crucial for fostering an environment where innovation can flourish on a level playing field.

Disclaimer: www.BrusselsReport.eu will under no circumstance be held legally responsible or liable for the content of any article appearing on the website, as only the author of an article is legally responsible for that, also in accordance with the terms of use.